Project Details

The “Snapchat Filter Interface” project combines several computational photography techniques to transform an original image into an output with filters and visual effects.

Several filters are available, including a flower crown, a beauty filter, and a vacation background. To build these features, the program uses third-party facial detection code and combines topics learned in 4475, such as affine transformations, Gaussian blurring, Canny edge detection, image contouring, masking, and other image layering techniques.

Why We Were Inspired

We were inspired partly by the interface design concepts we were learning in our user interface design class and partly by another group's project that used face detection in Python. As members of Gen Z, we use Snapchat heavily, and one of its main features is applying filters to users' faces. We wanted to create a project that drew on both of these inspirations.

Example Input and Output Images

Beauty Filter

Here, fake pimples are placed on the cheeks, and the filter smooths them out so that they blend into the cheeks and become unnoticeable. The smoothing region is determined from the positions and dimensions of the detected eyes. We selected this picture specifically to show that even bright, obvious blemishes can be diminished with this filter.

Beauty Filter Output Image

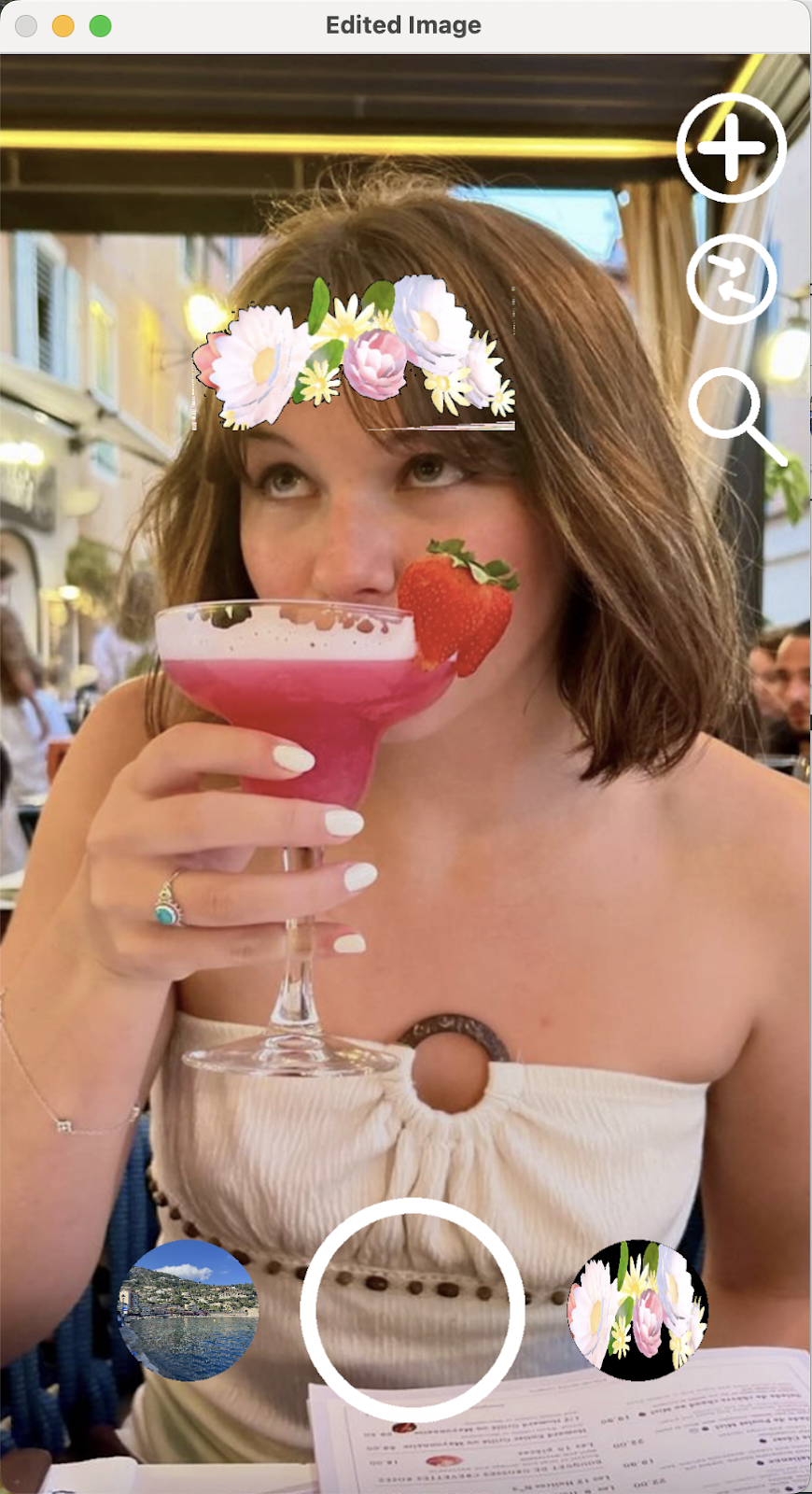

Interface Overlay Output Image

Flower Crown Filter

The flower crown is scaled based on the size of the head and rotated by an angle computed from the arctangent of the line between the eyes. The transformed crown is then overlaid on the head using its alpha channel. This picture was selected for two reasons: the mouth and nose are less visible, which shows that only the face and eyes need to be detected, and the eyes are at an angle, which demonstrates the affine rotation of the flower crown.
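The scaling and rotation can be written out as follows. This is a sketch under stated assumptions, not taken from the project code: the crown is assumed to be scaled so its width matches the detected face width, and each detected box is assumed to be (x, y, w, h) with (x, y) at the top-left corner. With $w_f$ the face width, $w_c$ the crown's original width, and $(c_x^{L}, c_y^{L})$, $(c_x^{R}, c_y^{R})$ the centers of the left and right eye boxes:

$$
s = \frac{w_f}{w_c}, \qquad
(c_x, c_y) = \left(x + \tfrac{w}{2},\; y + \tfrac{h}{2}\right), \qquad
\theta = \operatorname{atan2}\!\left(c_y^{R} - c_y^{L},\; c_x^{R} - c_x^{L}\right)
$$

Because the image y axis points down, a positive $\theta$ means the right eye sits lower, which is a clockwise tilt on screen. OpenCV's `cv2.getRotationMatrix2D` treats positive angles as counter-clockwise, so the crown would be rotated by $-\theta$ (converted to degrees) to follow the tilt.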

Input Image

Filter and Overlay Interface Output Image

Vacation Background Filter

The vacation background filter uses Canny edge detection to find the subject; the edges are then connected and contoured to build a mask that overlays the subject onto the background. This image was selected because its blank background makes the subject's edges easier to detect, which allows a cleaner overlay onto the background (and also because Sam wasn’t able to go to France with us, so we wanted to add her in).

Input Image

Output Image

Project Functions

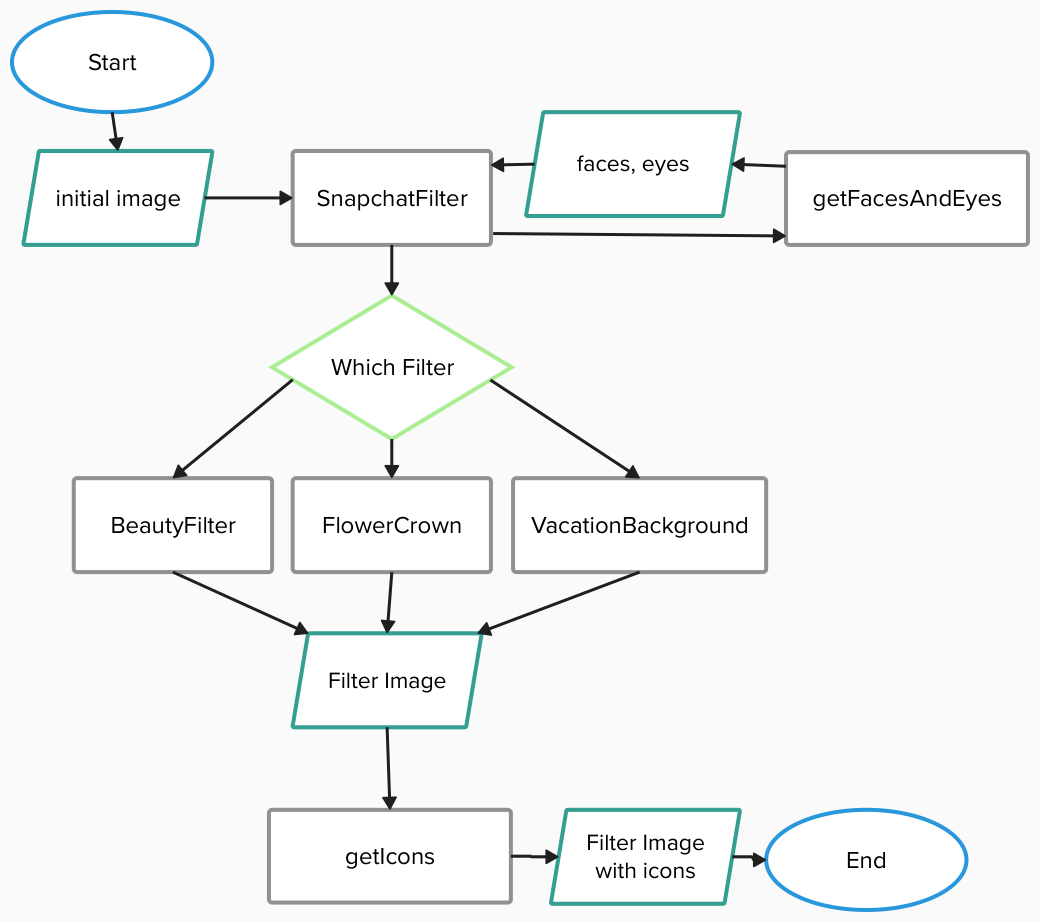

The program uses one primary function, SnapchatFilter, which calls different helper functions depending on its arguments to produce an output. Its output is a 1920x1080 image, the standard size Snapchat uses for images and videos.

getIcons()

This function creates the interface overlays shown on the final image, including the plus icon, magnification icon, switch-camera icon, and take-photo button. The icons are drawn with cv2's line and shape drawing functions. The function returns a tuple of all six icons, which are later overlaid on the image.

SnapchatFilter(img, filter)

This function takes in the main image and a string naming the desired filter. It first resizes the image to the standard 1920x1080 format. It then passes a grayscale copy of the image to the helper function getFacesAndEyes, and the detected faces and eyes are passed on to the helper function that corresponds to the requested filter. Finally, it overlays the interface icons on the image and returns the result.

getFacesAndEyes(grayImg)

This function is called from SnapchatFilter and takes in a grayscale version of the original image. It uses the face and eye cascade files downloaded from the outside source code to detect faces and eyes in the image. It returns a tuple (faces, eyes), where each element is a list of tuples giving a detected feature's bounding box: the x and y coordinates of its top-left corner, along with its width and height.

FlowerCrown(img, faces, eyes)

This filter takes in the original image along with the detected facial features and applies the flower crown. Several transformations are performed based on the feature detections:

- The flower crown is proportionally resized based on the face width value.

- The center of each eye is computed, and the arctangent of the slope between the two centers gives the rotation angle. The flower crown is then rotated about its center with an affine transformation.

- Once the crown is resized and rotated, it is overlaid onto the original image by iterating through the pixels and replacing each image pixel with the crown pixel wherever the crown's alpha channel shows the crown is visible.

BeautyFilter(img, faces, eyes)

This filter uses the detected facial features and the original image to smooth out blemishes on the subject's cheeks. First, each cheek area is approximated from the location and shape of the detected eyes. Each cheek is then copied into its own array and blurred with a box filter. Finally, the blurred cheek regions are sliced back into the main image, so a heavy blur is applied only to the cheek areas.

VacationBackground(img, faces, eyes)

This filter analyzes the original image to detect outlines and uses them to stitch the subject of the image onto a background. To do this, the original image is converted to grayscale, smoothed, and passed through cv2.Canny to find edges. Once the outlines have been established, the edges are dilated with a kernel and connected by detecting contours. cv2.drawContours is then used to create a mask in which the subject is white and everything else is black.

The mask is used to stitch the scenic background image and the main image together. For blending, the single-channel mask was expanded to three channels (one per color channel) and blurred with a Gaussian blur so that the subject's edges would look less rough in the final output image.

Project Flowchart

Flowchart

Outside Code

Outside code was sourced for the facial detection features, linked here. The frontal face and eye cascade XML files were used in the getFacesAndEyes function for facial feature detection.